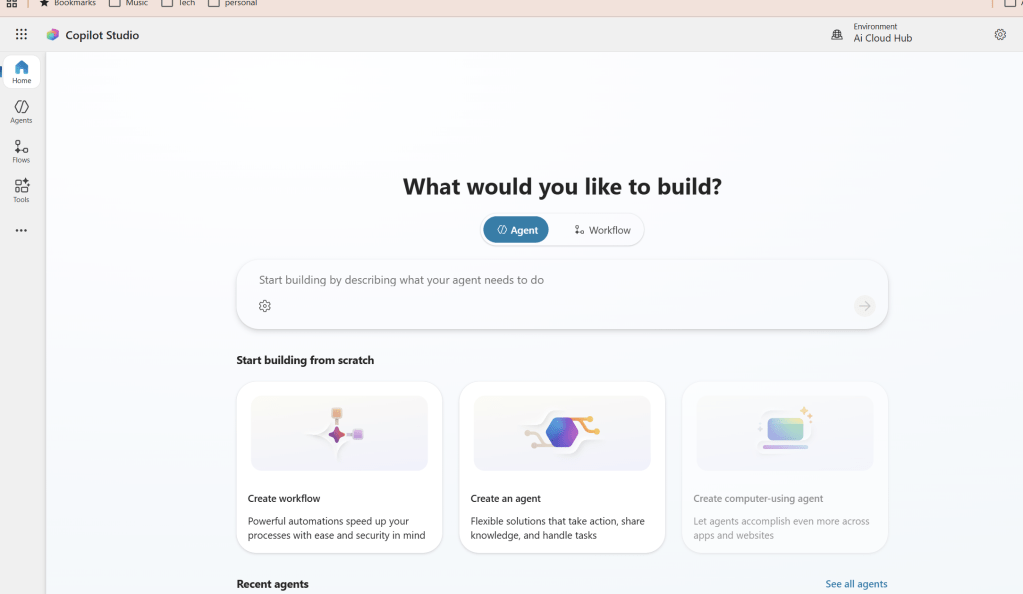

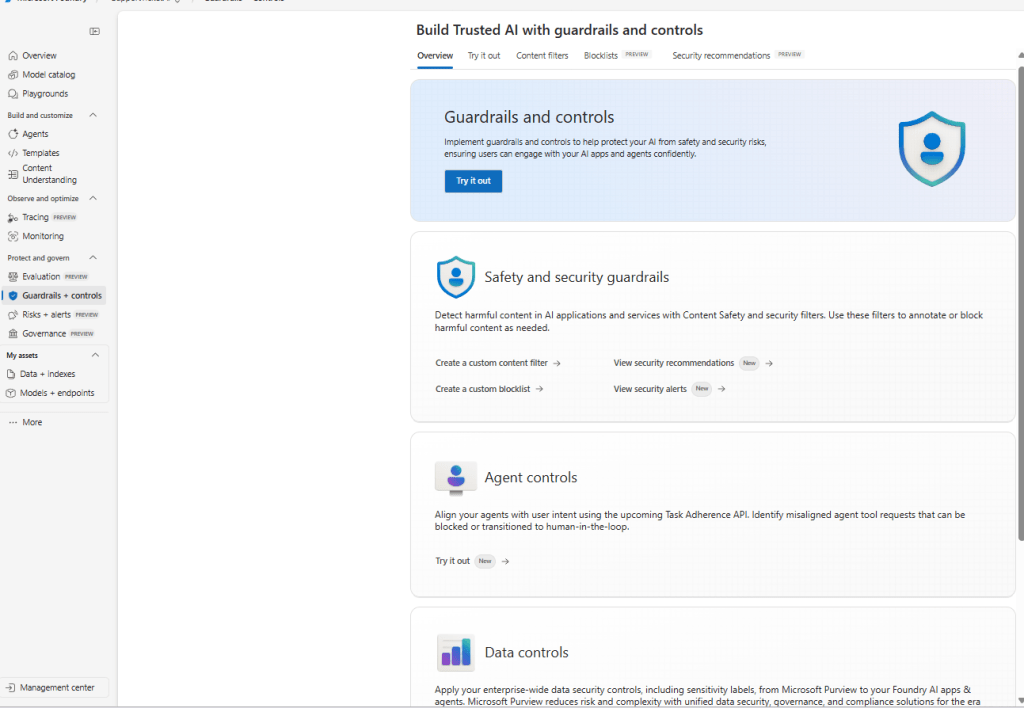

In this blog we will go through the Azure AI Foundry portal and its capabilities. The new Azure AI Foundry portal brings model experimentation, agent building, data grounding, and safety controls into a single, coherent workspace. It’s designed so builders can move from idea to prototype to hardened agent without context switching.

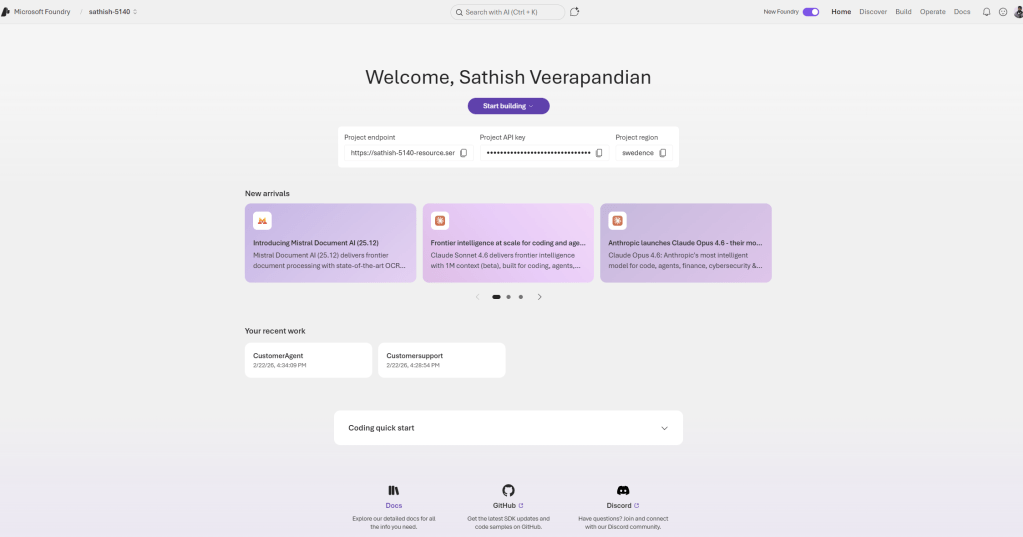

After logging in, the first step is to switch on the "New Foundry" toggle. This brings up an entirely new interface and lands us on the dashboard.

This dashboard is the Foundry project home for a developer or team building AI agents. It surfaces the project endpoint and API key for integration, shows the project region, and highlights recent model and tooling updates so teams can stay current. The page also lists recent projects and provides quick links to documentation and community resources, making it a practical launchpad for both prototyping and production work.
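Once the endpoint and API key are copied from the dashboard, a common pattern is to keep them out of source code and load them from environment variables. Here is a minimal sketch of that idea. The variable names `FOUNDRY_PROJECT_ENDPOINT` and `FOUNDRY_API_KEY` and the helper `load_foundry_config` are hypothetical names chosen for illustration, not something the portal defines.

```python
import os
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class FoundryProjectConfig:
    """Holds the two values copied from the project dashboard."""
    endpoint: str
    api_key: str


def load_foundry_config(env=None):
    """Read the endpoint and key from environment variables and sanity-check them.

    The variable names below are assumptions for this sketch; use whatever
    names your team has agreed on.
    """
    env = os.environ if env is None else env
    endpoint = env.get("FOUNDRY_PROJECT_ENDPOINT", "").strip()
    api_key = env.get("FOUNDRY_API_KEY", "").strip()

    if not endpoint or not api_key:
        raise ValueError("Set FOUNDRY_PROJECT_ENDPOINT and FOUNDRY_API_KEY first.")

    # Reject anything that is not an https URL with a host.
    parsed = urlparse(endpoint)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("The project endpoint should be an https URL.")

    # Drop a trailing slash so paths can be appended consistently later.
    return FoundryProjectConfig(endpoint=endpoint.rstrip("/"), api_key=api_key)
```

Calling `load_foundry_config()` at application start fails early with a clear message if either value is missing, and keeps the key out of the repository.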

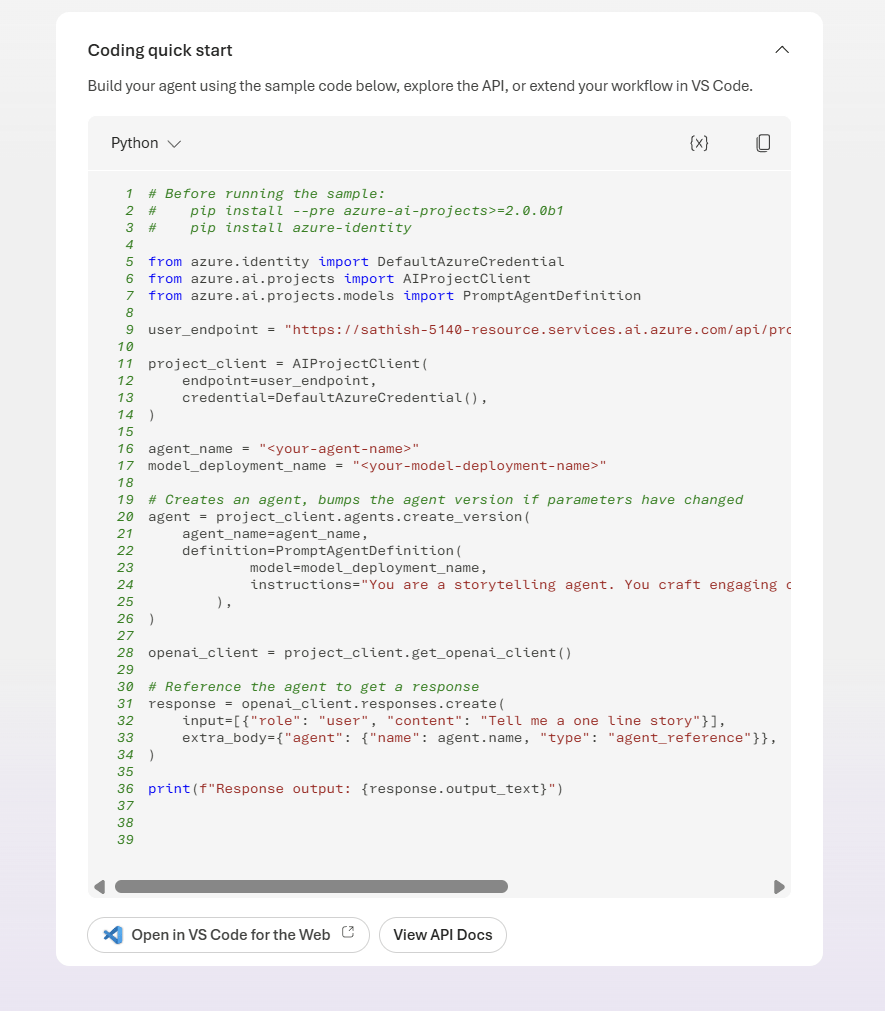

In the coding quick start section, we have the option to open the project in VS Code for the Web.